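DETR Paper Reading Notes

This post was last updated on 2024-08-16; its content may be out of date.

Key contributions

End-to-end: NMS and anchors are removed, so there are far fewer hand-tuned hyperparameters, no NMS post-processing step, and the whole pipeline becomes much simpler.

A new set-based loss: bipartite matching forces the model to output a set of unique predictions, with each object producing exactly one predicted box. Object detection thus becomes a direct set prediction problem, which is why NMS is no longer needed and the model works end to end.

The decoder takes a set of learnable object queries together with the global context features produced by the encoder and outputs the final 100 predicted boxes directly and in parallel, which replaces anchors.

Drawbacks: it detects large objects well but performs poorly on small objects, and it is slow to train.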
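To make the bipartite matching idea concrete, here is a minimal sketch in plain Python. The function name `match_predictions` and the cost values are made up for illustration; a real matching cost would combine classification and box terms, and real implementations typically use the Hungarian algorithm (for example SciPy's `linear_sum_assignment`) instead of this brute-force search.

```python
from itertools import permutations


def match_predictions(cost):
    """Brute-force one-to-one matching between predictions and ground truth.

    cost[i][j] is the cost of assigning prediction i to ground-truth box j.
    Returns (total_cost, assignment), where assignment[j] is the index of the
    prediction matched to ground-truth box j. Only suitable for tiny inputs.
    """
    num_preds, num_gt = len(cost), len(cost[0])
    best_total, best_assignment = float("inf"), None
    # Try every way of picking num_gt distinct predictions, in order.
    for pred_indices in permutations(range(num_preds), num_gt):
        total = sum(cost[i][j] for j, i in enumerate(pred_indices))
        if total < best_total:
            best_total, best_assignment = total, pred_indices
    return best_total, best_assignment


# 4 predictions, 2 ground-truth boxes (illustrative costs, not real data).
cost = [
    [0.9, 0.2],
    [0.1, 0.8],
    [0.7, 0.6],
    [0.5, 0.9],
]
total, assignment = match_predictions(cost)

# Predictions that were not matched are treated as background (no object).
matched = set(assignment)
unmatched = [i for i in range(len(cost)) if i not in matched]
print(assignment, unmatched)
```

Each ground-truth box ends up with exactly one prediction, and every remaining prediction is supervised as background, which is what makes a separate NMS step unnecessary.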

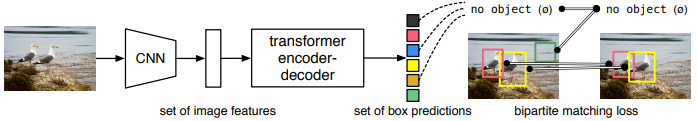

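Overall architecture

The input image first passes through a CNN that extracts local image features.

The features are then flattened and fed into the Transformer encoder, which learns global information on top of them. After the encoder, the relationship between every point (every feature) and all the other features of the image has been computed.

The encoder output is then sent to the decoder together with the object queries, which limit decoding to 100 boxes; the purpose of this step is to produce the 100 predicted boxes.

During training, the 100 predicted boxes are matched against the ground-truth (gt) boxes by bipartite matching, which determines which predictions correspond to an object and which do not (background). The box regression loss is computed on the matched predictions and their gt boxes, and the classification loss additionally supervises the unmatched predictions towards the no-object class. Inference is simpler: apply a confidence threshold (0.7) to the 100 boxes produced by the decoder, keep those above it and discard the rest.

Notes: every decoder layer applies self-attention over the object queries (the first layer could skip it), mainly to remove redundant boxes. To make training faster and more stable, auxiliary losses are added after the decoder layers: the loss is computed not only on the output of the last decoder layer but also on the outputs of the earlier decoder layers.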
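The inference step described above (threshold the 100 predictions by confidence) can be sketched in plain Python as follows. The helper name `filter_predictions` and the toy logits are assumptions for illustration; the only convention taken from the text is that the last class index stands for "no object".

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def filter_predictions(pred_logits, pred_boxes, threshold=0.7):
    """Keep queries whose best real-class probability exceeds the threshold.

    pred_logits: one list of class logits per query; the last entry is the
                 no-object class and is never returned as a label.
    pred_boxes:  one [cx, cy, w, h] box per query.
    """
    kept = []
    for logits, box in zip(pred_logits, pred_boxes):
        probs = softmax(logits)[:-1]  # drop the no-object probability
        score = max(probs)
        label = probs.index(score)
        if score > threshold:
            kept.append((label, score, box))
    return kept


# Three toy queries with two real classes plus no-object.
pred_logits = [
    [4.0, 0.0, 0.0],  # confident about class 0
    [0.0, 0.0, 5.0],  # confident about no-object
    [0.5, 0.6, 0.4],  # uncertain
]
pred_boxes = [
    [0.5, 0.5, 0.2, 0.3],
    [0.1, 0.1, 0.1, 0.1],
    [0.7, 0.7, 0.2, 0.2],
]
detections = filter_predictions(pred_logits, pred_boxes)
print(detections)
```

Only the first query survives: the second one is mostly "no object", and the third never reaches the threshold.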

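Questions

Why does ViT use only an encoder while DETR uses an encoder plus a decoder? (The answer is drawn from the experiments section of the paper.)

Answer: Encoder: encoder self-attention mainly performs global modelling and learns global features; after this step the individual objects in the image are already largely separated from each other.

Decoder: the decoder attention then refines this for detection and segmentation. It focuses on the extremities of each object's boundary and delineates them more precisely, so object edges are recognised more accurately.

What are object queries for?

Answer: object queries replace anchors. By introducing learnable object queries, the model learns by itself which regions of the image may contain objects, and the queries end up locating 100 such candidate regions. Bipartite matching then picks the valid predictions among the 100 boxes so the loss can be computed. In that sense object queries take over the role of anchors: they find likely object regions in a learnable way, without the large number of redundant boxes that anchors produce.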
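As a small illustration of what "learnable object queries" means in code, the sketch below builds the query table with `nn.Embedding` and broadcasts it over a batch. The sizes (100 queries, 256 channels, batch of 2) are chosen to match the shapes used in this post; the variable names are illustrative.

```python
import torch
from torch import nn

num_queries, hidden_dim, batch_size = 100, 256, 2

# The query table is just a [100, 256] weight matrix trained by backpropagation.
query_embed = nn.Embedding(num_queries, hidden_dim)
is_parameter = isinstance(query_embed.weight, nn.Parameter)

# The same queries are shared by every image: [100, 256] -> [100, bs, 256].
query_pos = query_embed.weight.unsqueeze(1).repeat(1, batch_size, 1)

# The decoder input starts as zeros with the same shape as the queries.
tgt = torch.zeros_like(query_pos)

print(is_parameter, tuple(query_pos.shape), tuple(tgt.shape))
```

Nothing about these queries depends on the input image; what they come to represent is determined only by training.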

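Why can tgt (the object information defined in the decoder, initialised to all zeros) and query_pos (the object position information, randomly initialised) carry such rich meaning? They start out as zeros or random values, so how does the model know what they represent?

Answer: this comes down to the loss function. Once the loss is defined, computing it and back-propagating the gradients lets the network keep learning, and the tgt and query_pos it ends up with take on the meaning described here. The same holds for the regression loss: we declare that four channels stand for x, y, w, h, but how does the network know? Through the gradients of the loss it gradually learns that these four channels represent x, y, w, h.

Why is tgt1 + tgt2 used as the decoder output rather than tgt1 or tgt2 alone?

Answer: 1. tgt1 carries the object information plus the object position information, but it contains little image feature content, so predictions from it alone would be poor (class predictions in particular would be inaccurate).

2. tgt2 carries the encoder-enhanced image features plus the object position information, but it lacks the object information, so predictions from it alone would also be poor (box positions in particular would be inaccurate).

Summing the two gives the features that the decoder outputs and that are used to predict class and position, which works best.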

DETR source code

class DETR(nn.Module):

""" This is the DETR module that performs object detection """

def __init__(self, backbone, transformer, num_classes, num_queries, aux_loss=False):

""" Initializes the model.

Parameters:

backbone: torch module of the backbone to be used. See backbone.py

transformer: torch module of the transformer architecture. See transformer.py

num_classes: number of object classes

num_queries: number of object queries, ie detection slot. This is the maximal number of objects

DETR can detect in a single image. For COCO, we recommend 100 queries.

aux_loss: True if auxiliary decoding losses (loss at each decoder layer) are to be used.

"""

super().__init__()

self.num_queries = num_queries

self.transformer = transformer

hidden_dim = transformer.d_model

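# classification head

self.class_embed = nn.Linear(hidden_dim, num_classes + 1)

# box regression head

self.bbox_embed = MLP(hidden_dim, hidden_dim, 4, 3)

# self.query_embed plays a role similar to anchors in traditional detectors; 100 are used here: [100, 256]

# nn.Embedding is used here only as a learnable weight table, much like an nn.Parameter of the same shape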

self.query_embed = nn.Embedding(num_queries, hidden_dim)

self.input_proj = nn.Conv2d(backbone.num_channels, hidden_dim, kernel_size=1)

self.backbone = backbone

self.aux_loss = aux_loss # True

def forward(self, samples: NestedTensor):

""" The forward expects a NestedTensor, which consists of:

- samples.tensor: batched images, of shape [batch_size x 3 x H x W]

- samples.mask: a binary mask of shape [batch_size x H x W], containing 1 on padded pixels

It returns a dict with the following elements:

- "pred_logits": the classification logits (including no-object) for all queries.

Shape= [batch_size x num_queries x (num_classes + 1)]

- "pred_boxes": The normalized boxes coordinates for all queries, represented as

(center_x, center_y, width, height). These values are normalized in [0, 1],

relative to the size of each individual image (disregarding possible padding).

See PostProcess for information on how to retrieve the unnormalized bounding box.

- "aux_outputs": Optional, only returned when auxiliary losses are activated. It is a list of

dictionaries containing the two above keys for each decoder layer.

"""

if isinstance(samples, (list, torch.Tensor)):

samples = nested_tensor_from_tensor_list(samples)

# features: list{0: tensor=[bs,2048,19,26] + mask=[bs,19,26]}, output of the last stage (block5) of the ResNet-50 backbone

# pos: list{0: [bs,256,19,26]}, position encodings

features, pos = self.backbone(samples)

# src: Tensor [bs,2048,19,26]

# mask: Tensor [bs,19,26]

src, mask = features[-1].decompose()

assert mask is not None

# forward pass through the transformer

# self.input_proj(src): [bs,2048,19,26] -> [bs,256,19,26]

# mask: True marks padded positions, which are excluded from the attention computation

# self.query_embed.weight: plays a role similar to anchors in traditional detectors; 100 are used here

# pos[-1]: position encoding [bs, 256, 19, 26]

# hs: [6, bs, 100, 256]

hs = self.transformer(self.input_proj(src), mask, self.query_embed.weight, pos[-1])[0]

# classification: [6 decoder layers, bs, 100, 256] -> [6, bs, 100, 92 (classes)]

outputs_class = self.class_embed(hs)

# regression: [6 decoder layers, bs, 100, 256] -> [6, bs, 100, 4]

outputs_coord = self.bbox_embed(hs).sigmoid()

out = {'pred_logits': outputs_class[-1], 'pred_boxes': outputs_coord[-1]}

if self.aux_loss: # True

out['aux_outputs'] = self._set_aux_loss(outputs_class, outputs_coord)

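# dict with 3 entries

# 0 pred_logits: classification head output [bs, 100, 92 (number of classes)]

# 1 pred_boxes: regression head output [bs, 100, 4]

# 2 aux_outputs: list of 5, the outputs of the first 5 decoder layers: 5 pred_logits [bs, 100, 92 (number of classes)] and 5 pred_boxes [bs, 100, 4]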

return out

@torch.jit.unused

def _set_aux_loss(self, outputs_class, outputs_coord):

# this is a workaround to make torchscript happy, as torchscript

# doesn't support dictionary with non-homogeneous values, such

# as a dict having both a Tensor and a list.

return [{'pred_logits': a, 'pred_boxes': b}

for a, b in zip(outputs_class[:-1], outputs_coord[:-1])]
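The docstring above says the predicted boxes are normalized centre-format boxes and points to PostProcess for recovering pixel coordinates. As a rough sketch of that conversion (a plain-Python re-implementation written for illustration, not the repository's code; `scale_to_image` is a hypothetical helper name):

```python
def box_cxcywh_to_xyxy(box):
    """Convert [cx, cy, w, h] to [x0, y0, x1, y1] (same units)."""
    cx, cy, w, h = box
    return [cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h]


def scale_to_image(box_xyxy, img_w, img_h):
    """Scale a normalized [x0, y0, x1, y1] box to pixel coordinates."""
    x0, y0, x1, y1 = box_xyxy
    return [x0 * img_w, y0 * img_h, x1 * img_w, y1 * img_h]


# A box centred in the image, a quarter of its width and half of its height.
box = [0.5, 0.5, 0.25, 0.5]
pixels = scale_to_image(box_cxcywh_to_xyxy(box), img_w=640, img_h=480)
print(pixels)  # [240.0, 120.0, 400.0, 360.0]
```

Because the values are relative to each image's own size, the same prediction maps to different pixel boxes for differently sized images.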

The Transformer

class Transformer(nn.Module):

def __init__(self, d_model=512, nhead=8, num_encoder_layers=6,

num_decoder_layers=6, dim_feedforward=2048, dropout=0.1,

activation="relu", normalize_before=False,

return_intermediate_dec=False):

super().__init__()

"""

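d_model: token embedding dimension used throughout the transformer (default 512; 256 in the shapes shown in this post)

nhead: number of attention heads, 8

num_encoder_layers: number of encoder layers, 6

num_decoder_layers: number of decoder layers, 6

dim_feedforward: hidden dimension of the feed-forward network, 2048

dropout: 0.1

activation: activation function type, relu

normalize_before: whether to apply LayerNorm before each sub-layer (pre-norm)

return_intermediate_dec: whether to return the outputs of the intermediate decoder layers, False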

"""

# build a single encoder layer

encoder_layer = TransformerEncoderLayer(d_model, nhead, dim_feedforward,

dropout, activation, normalize_before)

encoder_norm = nn.LayerNorm(d_model) if normalize_before else None

# build the full encoder: 6 stacked encoder layers

self.encoder = TransformerEncoder(encoder_layer, num_encoder_layers, encoder_norm)

# build a single decoder layer

decoder_layer = TransformerDecoderLayer(d_model, nhead, dim_feedforward,

dropout, activation, normalize_before)

decoder_norm = nn.LayerNorm(d_model)

# build the full decoder: 6 stacked decoder layers

self.decoder = TransformerDecoder(decoder_layer, num_decoder_layers, decoder_norm,

return_intermediate=return_intermediate_dec)

# parameter initialisation

self._reset_parameters()

self.d_model = d_model # token embedding dimension, 512 by default

self.nhead = nhead # number of attention heads, 8

def _reset_parameters(self):

for p in self.parameters():

if p.dim() > 1:

nn.init.xavier_uniform_(p)

def forward(self, src, mask, query_embed, pos_embed):

"""

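src: [bs,256,19,26] feature map after the backbone and the 1x1 conv

mask: [bs, 19, 26] marks which positions of the feature map are padding (False on the original image, True on padding)

query_embed: [100, 256] plays a role similar to anchors in traditional detectors; 100 are used here, one per object to predict

pos_embed: [bs, 256, 19, 26] position encoding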

"""

# bs c=256 h=19 w=26

bs, c, h, w = src.shape

# src: [bs,256,19,26]=[bs,C,H,W] -> [494,bs,256]=[HW,bs,C]

src = src.flatten(2).permute(2, 0, 1)

# pos_embed: [bs, 256, 19, 26]=[bs,C,H,W] -> [494,bs,256]=[HW,bs,C]

pos_embed = pos_embed.flatten(2).permute(2, 0, 1)

# query_embed: [100, 256]=[num,C] -> [100,bs,256]=[num,bs,256]

query_embed = query_embed.unsqueeze(1).repeat(1, bs, 1)

# mask: [bs, 19, 26]=[bs,H,W] -> [bs,494]=[bs,HW]

mask = mask.flatten(1)

# tgt: [100, bs, 256] query embeddings for the objects to predict; same shape as query_embed, initialised to all zeros

# refined in every decoder layer, i.e. a repeated coarse-to-fine process

tgt = torch.zeros_like(query_embed)

# memory: [494, bs, 256]=[HW, bs, 256] encoder output: feature representation enriched with global context

memory = self.encoder(src, src_key_padding_mask=mask, pos=pos_embed)

# hs: [6, 100, bs, 256] when return_intermediate_dec=True

# tgt: query embeddings for the objects to predict

# memory: encoder output

# pos: position encoding of memory

# query_pos: position encoding of tgt

hs = self.decoder(tgt, memory, memory_key_padding_mask=mask,

pos=pos_embed, query_pos=query_embed)

# decoder output: [6, 100, bs, 256] -> [6, bs, 100, 256]

# encoder output: [bs, 256, H, W]

return hs.transpose(1, 2), memory.permute(1, 2, 0).view(bs, c, h, w)
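The reshaping done in this forward pass can be checked in isolation. The sketch below uses random data with the shapes from the comments above (bs=2 is an arbitrary choice, and the padded region of the second image is made up) to show the flatten and permute steps and that they are reversible.

```python
import torch

bs, c, h, w = 2, 256, 19, 26
src = torch.randn(bs, c, h, w)

# Padding mask: True on padded positions. Here the second image is assumed
# to be padded on its right side (columns 20..25), purely for illustration.
mask = torch.zeros(bs, h, w, dtype=torch.bool)
mask[1, :, 20:] = True

# [bs, C, H, W] -> [bs, C, HW] -> [HW, bs, C], the layout nn.MultiheadAttention
# expects by default (sequence first).
src_seq = src.flatten(2).permute(2, 0, 1)

# [bs, H, W] -> [bs, HW], one flag per sequence position.
mask_seq = mask.flatten(1)

# Undo the reshaping: [HW, bs, C] -> [bs, C, HW] -> [bs, C, H, W].
restored = src_seq.permute(1, 2, 0).view(bs, c, h, w)

print(tuple(src_seq.shape), tuple(mask_seq.shape))
```

The sequence length 494 is simply 19 x 26, so every spatial location of the feature map becomes one token.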

Transformer encoder source code

class TransformerEncoderLayer(nn.Module):

def __init__(self, d_model, nhead, dim_feedforward=2048, dropout=0.1,

activation="relu", normalize_before=False):

super().__init__()

"""

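A single encoder layer. Structure: multi-head attention + add & norm + feed forward + add & norm

d_model: token embedding dimension (input and output size of the layer)

nhead: number of attention heads, 8

dim_feedforward: hidden dimension of the feed-forward network, 2048

dropout: 0.1

activation: activation function type

normalize_before: whether to apply LayerNorm first (pre-norm), False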

"""

self.self_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout)

# Implementation of Feedforward model

self.linear1 = nn.Linear(d_model, dim_feedforward)

self.dropout = nn.Dropout(dropout)

self.linear2 = nn.Linear(dim_feedforward, d_model)

self.norm1 = nn.LayerNorm(d_model)

self.norm2 = nn.LayerNorm(d_model)

self.dropout1 = nn.Dropout(dropout)

self.dropout2 = nn.Dropout(dropout)

self.activation = _get_activation_fn(activation)

self.normalize_before = normalize_before

def with_pos_embed(self, tensor, pos: Optional[Tensor]):

# adds the position encoding to the input features

return tensor if pos is None else tensor + pos

def forward_post(self,

src,

src_mask: Optional[Tensor] = None,

src_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None):

"""

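src: [494, bs, 256] backbone feature map (downsampled 32x) after its channel dimension is reduced to 256

src_mask: None

src_key_padding_mask: [bs, 494] marks padded positions; True means padding, which carries no information and is excluded from attention

pos: [494, bs, 256] position encoding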

"""

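# features + position encoding: [494, bs, 256]

# unlike the original Transformer encoder, q and k get the position encoding added in every encoder layer; the q-k similarity then weights v to produce features enriched with global context

# the original Transformer adds the position encoding only at the input; the authors found that adding it in every layer works better

q = k = self.with_pos_embed(src, pos)

# multi-head attention: [494, bs, 256]

# q and k = backbone feature map + position encoding

# v = backbone feature map

# q and k carry the position encoding because they are used to compute the similarity between positions of the image features; v holds the image features themselves and is weighted by that similarity matrix,

# giving each position a representation that incorporates global context, so q and k need the position encoding while v does not

# nn.MultiheadAttention returns two values: the attention output and the attention weights; index 0 takes the output

# key_padding_mask: marks which feature-map positions come from image padding and are therefore meaningless

# their attention scores are set to -inf, so their weights become 0 after softmax and they are effectively ignored

# attn_mask: used in the original Transformer to prevent "cheating", i.e. to hide the positions after the current one so that no attention is paid to them

# it is None here in the encoder; it is normally only needed in an autoregressive decoder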

src2 = self.self_attn(q, k, value=src, attn_mask=src_mask,

key_padding_mask=src_key_padding_mask)[0]

# add + norm + feed forward + add + norm

src = src + self.dropout1(src2)

src = self.norm1(src)

src2 = self.linear2(self.dropout(self.activation(self.linear1(src))))

src = src + self.dropout2(src2)

src = self.norm2(src)

return src

def forward_pre(self, src,

src_mask: Optional[Tensor] = None,

src_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None):

src2 = self.norm1(src)

q = k = self.with_pos_embed(src2, pos)

src2 = self.self_attn(q, k, value=src2, attn_mask=src_mask,

key_padding_mask=src_key_padding_mask)[0]

src = src + self.dropout1(src2)

src2 = self.norm2(src)

src2 = self.linear2(self.dropout(self.activation(self.linear1(src2))))

src = src + self.dropout2(src2)

return src

def forward(self, src,

src_mask: Optional[Tensor] = None,

src_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None):

if self.normalize_before: # False

return self.forward_pre(src, src_mask, src_key_padding_mask, pos)

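return self.forward_post(src, src_mask, src_key_padding_mask, pos) # default path

Why do q and k get the position encoding in every encoder layer? In a standard Transformer the position encoding is added once at the input, and within each encoder layer q, k and v are identical, with no extra position encoding. Here q and k first have the position encoding added, their similarity is computed, and the result weights v to give features enriched with global context. Because the position encoding is added in every layer, the positional information is reinforced layer by layer, which the authors found to work better.

Why is the position encoding added to q and k but not to v? q and k are used to compute the similarity between positions of the image features, and adding the position encoding makes that similarity position-aware. v holds the image features themselves, so it does not need the position encoding.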
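A minimal sketch of this attention call, using `nn.MultiheadAttention` directly with small made-up sizes (6 tokens, 16 channels, 4 heads) and random tensors standing in for the features and the position encoding. The last two tokens are marked as padding to show the effect of `key_padding_mask`.

```python
import torch
from torch import nn

torch.manual_seed(0)
seq_len, bs, d_model, nhead = 6, 1, 16, 4

src = torch.randn(seq_len, bs, d_model)  # stand-in for image features
pos = torch.randn(seq_len, bs, d_model)  # stand-in for position encoding

# True marks padded tokens that must not receive any attention.
key_padding_mask = torch.tensor([[False, False, False, False, True, True]])

attn = nn.MultiheadAttention(d_model, nhead)
attn.eval()

# Position encoding goes into q and k only; v stays the raw features.
q = k = src + pos
out, weights = attn(q, k, value=src, key_padding_mask=key_padding_mask)

print(tuple(out.shape))      # (6, 1, 16)
print(tuple(weights.shape))  # (1, 6, 6), averaged over heads
print(weights[0, :, 4:])     # attention paid to padded keys
```

Every query assigns zero weight to the two padded keys, and each row of the weight matrix still sums to one over the remaining positions.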

Transformer decoder source code

class TransformerDecoderLayer(nn.Module):

def __init__(self, d_model, nhead, dim_feedforward=2048, dropout=0.1,

activation="relu", normalize_before=False):

super().__init__()

self.self_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout)

self.multihead_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout)

# Implementation of Feedforward model

self.linear1 = nn.Linear(d_model, dim_feedforward)

self.dropout = nn.Dropout(dropout)

self.linear2 = nn.Linear(dim_feedforward, d_model)

self.norm1 = nn.LayerNorm(d_model)

self.norm2 = nn.LayerNorm(d_model)

self.norm3 = nn.LayerNorm(d_model)

self.dropout1 = nn.Dropout(dropout)

self.dropout2 = nn.Dropout(dropout)

self.dropout3 = nn.Dropout(dropout)

self.activation = _get_activation_fn(activation)

self.normalize_before = normalize_before

def with_pos_embed(self, tensor, pos: Optional[Tensor]):

return tensor if pos is None else tensor + pos

def forward_post(self, tgt, memory,

tgt_mask: Optional[Tensor] = None,

memory_mask: Optional[Tensor] = None,

tgt_key_padding_mask: Optional[Tensor] = None,

memory_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None,

query_pos: Optional[Tensor] = None):

"""

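tgt: query embeddings for the objects to predict; they model the object information in the image and are refined in every decoder layer

[100, bs, 256], same shape as query_embed, initialised to all zeros

memory: [h*w, bs, 256] encoder output: feature representation enriched with global context

tgt_mask: None

memory_mask: None

tgt_key_padding_mask: None

memory_key_padding_mask: [bs, h*w] marks whether each position of the encoder output feature map is padding (True = invalid, False = valid)

pos: [h*w, bs, 256] position encoding of the encoder output feature map

query_pos: [100, bs, 256] position encoding of the query embeddings (tgt); models the positional relations between objects; randomly initialised and learned

tgt_mask, memory_mask and tgt_key_padding_mask serve to prevent cheating in other settings; none of them is used here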

"""

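# purpose of the first attention (self-attention): gather the object information in the image -> tgt

# first multi-head self-attention layer: q, k and v are independent of the encoder and all come from tgt (the query embeddings)

# this self-attention keeps modelling the relations between objects, so the queries learn which positions in the image may contain objects; object information -> tgt

# query embedding + query_pos

q = k = self.with_pos_embed(tgt, query_pos)

# multi-head self-attention over the query embeddings (no mask is applied because tgt_mask is None)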

tgt2 = self.self_attn(q, k, value=tgt, attn_mask=tgt_mask,

key_padding_mask=tgt_key_padding_mask)[0]

# add + norm

tgt = tgt + self.dropout1(tgt2)

tgt = self.norm1(tgt)

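# purpose of the second attention (cross-attention): combine the object information with the encoder output features so that the objects in the image are represented better

# second multi-head attention layer, the encoder-decoder (cross) attention: key and value come from the encoder output, query comes from the decoder input

# this cross-attention models the relation between the image and the objects

# the tgt from the previous step is used as the query to interrogate the encoder feature map (q-k similarity): where are the objects in the image?

# the answer then weights v, so the result combines the encoder-enhanced features with the object position information

# query = query embedding + query_pos

# key = encoder output features + their position encoding

# value = encoder output features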

tgt2 = self.multihead_attn(query=self.with_pos_embed(tgt, query_pos),

key=self.with_pos_embed(memory, pos),

value=memory, attn_mask=memory_mask,

key_padding_mask=memory_key_padding_mask)[0]

# add + norm + feed forward + add + norm

tgt = tgt + self.dropout2(tgt2)

tgt = self.norm2(tgt)

tgt2 = self.linear2(self.dropout(self.activation(self.linear1(tgt))))

tgt = tgt + self.dropout3(tgt2)

tgt = self.norm3(tgt)

# [100, bs, 256]

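# through the residual connections, the decoder layer output is the sum of the first (self-)attention output and the second (cross-)attention output

# final features: relations between objects + encoder-enhanced image features + relations between the image and the objects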

return tgt

def forward_pre(self, tgt, memory,

tgt_mask: Optional[Tensor] = None,

memory_mask: Optional[Tensor] = None,

tgt_key_padding_mask: Optional[Tensor] = None,

memory_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None,

query_pos: Optional[Tensor] = None):

tgt2 = self.norm1(tgt)

q = k = self.with_pos_embed(tgt2, query_pos)

tgt2 = self.self_attn(q, k, value=tgt2, attn_mask=tgt_mask,

key_padding_mask=tgt_key_padding_mask)[0]

tgt = tgt + self.dropout1(tgt2)

tgt2 = self.norm2(tgt)

tgt2 = self.multihead_attn(query=self.with_pos_embed(tgt2, query_pos),

key=self.with_pos_embed(memory, pos),

value=memory, attn_mask=memory_mask,

key_padding_mask=memory_key_padding_mask)[0]

tgt = tgt + self.dropout2(tgt2)

tgt2 = self.norm3(tgt)

tgt2 = self.linear2(self.dropout(self.activation(self.linear1(tgt2))))

tgt = tgt + self.dropout3(tgt2)

return tgt

def forward(self, tgt, memory,

tgt_mask: Optional[Tensor] = None,

memory_mask: Optional[Tensor] = None,

tgt_key_padding_mask: Optional[Tensor] = None,

memory_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None,

query_pos: Optional[Tensor] = None):

if self.normalize_before:

return self.forward_pre(tgt, memory, tgt_mask, memory_mask,

tgt_key_padding_mask, memory_key_padding_mask, pos, query_pos)

return self.forward_post(tgt, memory, tgt_mask, memory_mask,

tgt_key_padding_mask, memory_key_padding_mask, pos, query_pos)

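Decoder summary

From the final encoder output we get the enhanced image features memory and the position information of those features, pos.

We define the object information tgt, initialised to all zeros, and the object position information query_pos, randomly initialised.

First attention (self-attention): q = k = tgt + query_pos, v = tgt. It computes the similarity between objects and models the object information in the image. The result, tgt1, is the enhanced object information, which includes the positional relations between objects.

Second attention (cross-attention): q = tgt + query_pos, k = memory + pos, v = memory. With the object information tgt as the query, it interrogates the image features memory (computes their relevance): where are the objects in the image? The answer is then used to weight the image features (v). This step models the relation between image features and object features and yields tgt2, which combines the encoder-enhanced image features with the object position features.

Finally, tgt1 + tgt2 = encoder-enhanced image features + object information + object position information is used as the decoder output.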
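One consequence of initialising tgt to zeros is worth seeing numerically. In the first decoder layer the value input of the self-attention is all zeros, so every query receives the same output vector regardless of query_pos, which is consistent with the remark that the first layer's self-attention could be skipped. The sketch below uses small made-up sizes and randomly filled biases as a stand-in for trained weights; it is an illustration under those assumptions, not a statement about a trained model.

```python
import torch
from torch import nn

torch.manual_seed(0)
num_queries, bs, d_model, nhead = 5, 1, 16, 4

self_attn = nn.MultiheadAttention(d_model, nhead)
self_attn.eval()

# Biases are zero right after construction; fill them with random values to
# mimic a trained module (an assumption made only for this demonstration).
nn.init.normal_(self_attn.in_proj_bias)
nn.init.normal_(self_attn.out_proj.bias)

query_pos = torch.randn(num_queries, bs, d_model)
tgt = torch.zeros_like(query_pos)  # first-layer decoder input

# Same call pattern as the decoder layer: q = k = tgt + query_pos, v = tgt.
q = k = tgt + query_pos
tgt2, _ = self_attn(q, k, value=tgt)

# Every query gets the same vector: the values are identical for all keys,
# so the attention weights (which do differ per query) cannot change the result.
rows_identical = torch.allclose(tgt2, tgt2[0:1].expand_as(tgt2), atol=1e-5)
print(rows_identical)
```

From the second layer onwards tgt is no longer zero, so the self-attention output does differ between queries.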